AI Video Editing Results (Short-Form): Descript vs Gling vs Loom vs TimeBolt

Sep 14, 2025

Last Update: October 13th, 2025

Watchability is everything in short-form video

When you’re sending a quick message, posting a reel, or sharing a clip, you’ve got only a few seconds to earn attention. If the first 15 seconds of your video stretch to 23 because of pauses, ums, and filler, that’s a 53% increase in runtime.

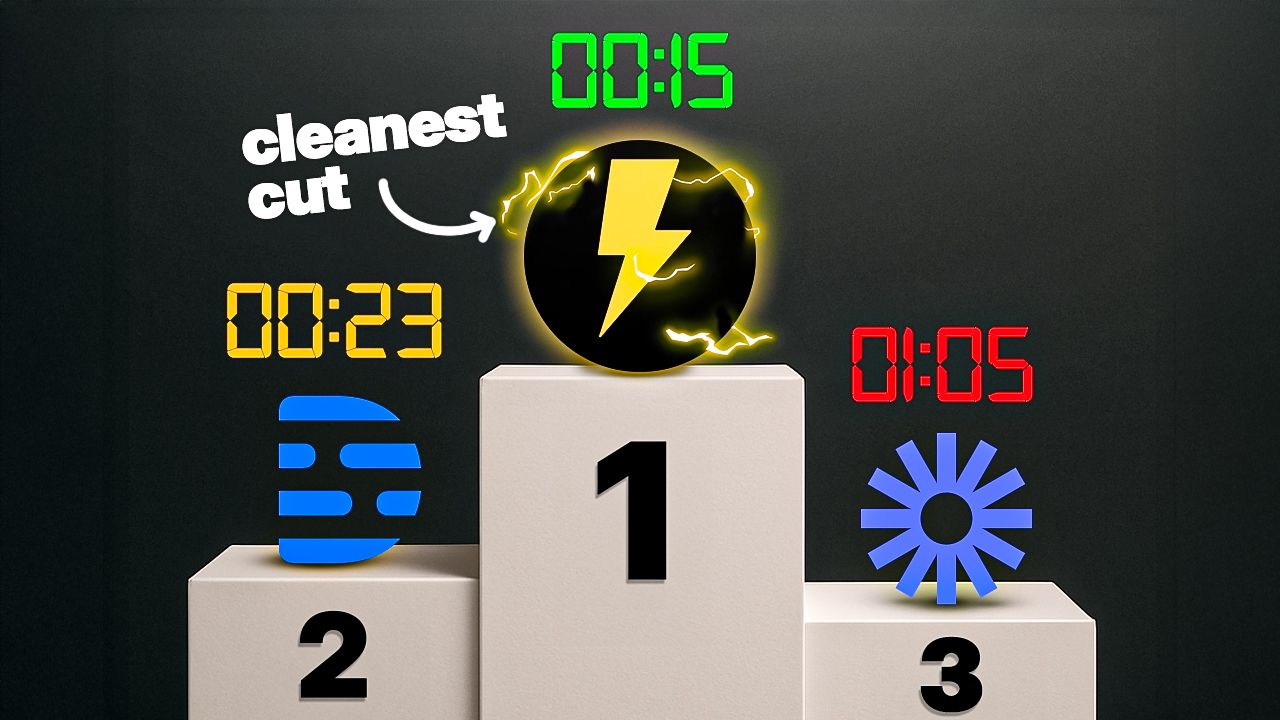

In this Part One of our AI Video Editor Showdown, we test TimeBolt, Descript, Gling, and Loom on a 93-second raw recording filled with silences and hesitations. The goal: see which tool keeps your message sharp and your audience engaged.

For Part Two (Long-Form Test: TimeBolt vs Descript vs Gling), we scaled the test to a 60-minute Zoom recording to see how small misses compound into wasted minutes and hours. In Part Three, we test CapCut vs Premiere Pro vs TimeBolt (short- and long-form tests). In Part Four, we test how accurately Descript, Gling, and TimeBolt remove bad takes.

The verified results and files are presented below so others can reproduce the test.

FOR CONTEXT, HERE ARE THE PLAYERS

- Descript has raised around $100 million from investors that include OpenAI Startup Fund, Andreessen Horowitz, Redpoint, and Spark Capital.

- Gling is a newer AI editor built for long-form creators, focusing on podcasts and YouTube videos with automated silence and filler removal.

- Loom was acquired by Atlassian for $975 million in 2023.

- TimeBolt is rapid video communications software, bootstrapped since 2019, no outside funding.

Descript vs Gling vs Loom vs TimeBolt

Each editor ran its native silence and filler automations. We then exported the resulting JSON timelines and re-verified them in TimeBolt’s reverse timeline to measure what was missed. The results are backed by word-token-level SRT files listing the filler and silence each platform failed to detect or remove.

Here are the test results side-by-side.

This update adds Gling’s verified JSON output (22 seconds total, 7 seconds waste), analyzed through the same reverse-timeline method used for the other tools. All tests were performed with identical input, and Gling’s Bad Takes feature remained OFF for consistency.

*Data Verification: Each editor’s output was exported as JSON and re-imported into TimeBolt’s reverse (waveform) timeline, where every silence and filler detection was re-measured to verify the leftover waste time.
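To make the verification step concrete, here is a minimal sketch of how leftover silence could be measured from an exported timeline. It is not TimeBolt’s actual reverse-timeline implementation: the `Span` type, the `leftover_silence` function, and the idea that each export reduces to kept segments plus word timestamps in source time are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """A time range in seconds, measured on the source recording (assumed schema)."""
    start: float
    end: float


def leftover_silence(kept, words, min_gap=0.5):
    """Return silent regions of at least min_gap seconds that survive inside kept segments.

    kept  -- segments an editor left in the output (hypothetical export format)
    words -- word-level tokens with start/end timestamps
    """
    ordered_words = sorted(words, key=lambda w: w.start)
    regions = []
    for seg in kept:
        cursor = seg.start
        for w in ordered_words:
            if w.end <= seg.start or w.start >= seg.end:
                continue  # word lies entirely outside this kept segment
            if w.start - cursor >= min_gap:
                regions.append(Span(cursor, w.start))
            cursor = max(cursor, min(w.end, seg.end))
        if seg.end - cursor >= min_gap:
            regions.append(Span(cursor, seg.end))  # trailing silence in the segment
    return regions


# Toy data, not taken from the test files.
kept = [Span(0.0, 3.0), Span(5.0, 6.0)]
words = [Span(0.1, 0.5), Span(2.0, 2.4), Span(5.1, 5.9)]
missed = leftover_silence(kept, words)
print(round(sum(r.end - r.start for r in missed), 2))  # 2.1
```

The 0.5-second default mirrors the dead-air definition used in this test; a different threshold would change which regions count as waste.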

Test Overview

We ran a 93-second unscripted recording through four leading AI editors (TimeBolt, Descript, Gling, and Loom) using identical source video and default silence/filler settings. Each output was verified through exported JSON word tokens and playback review.

How We Tested

Each clip was processed on a Mac Studio (M2 Ultra) with native app installs. Silence and filler detection used default automation settings, with no manual cuts. Output SRT and JSON transcripts were compared side by side to calculate total waste, missed filler, and cleanup time.

All data collection and verification were performed internally using TimeBolt’s waveform timeline. No human editing or bias adjustment was applied.

Executive Summary

TimeBolt remains the only editor to achieve zero-repair output on both short- and long-form tests. Descript and Gling each left measurable filler and silence requiring manual fixes, while Loom lacked an editing layer altogether. Across tests, TimeBolt averaged 4–10× faster total time-to-publish.

| Tool | Output Length | Filler Missed | Silence Missed | Cleanup Time | Result |
|---|---|---|---|---|---|
| TimeBolt | 14 s | 0 | 0 s | 0 min | Clean baseline; zero repair required. |
| Descript | 22 s | 11 words | 15 s | ≈4–5 min | Multiple fillers left; needs manual cleanup. |
| Gling | 21 s | 12 words | 16 s | ≈10.7 min | Better than Descript, but still manual rework. |
| Loom | 55 s | 4 words | 36 s | n/a | No timeline editor, so missed filler can’t be fixed. |

- TimeBolt cut the video to 14 seconds in one pass with zero filler and zero silence left, requiring no manual cleanup.

- Descript removed most pauses but left 11 fillers and roughly 22 seconds of waste, taking an estimated 4–5 minutes to repair manually.

- Gling performed slightly better on filler detection but still left 12 fillers and 16 seconds of silence, resulting in about 10 minutes of extra editing.

- Loom produced the longest output (55 seconds) and offers no way to repair missed sections since it lacks a timeline editor.

Short-form AI editors promise effortless speed. But TimeBolt remains the only tool to cut video faster than you record it, with zero repair required. Others still leave filler, silence, and post-fixing that defeat the promise of automation.

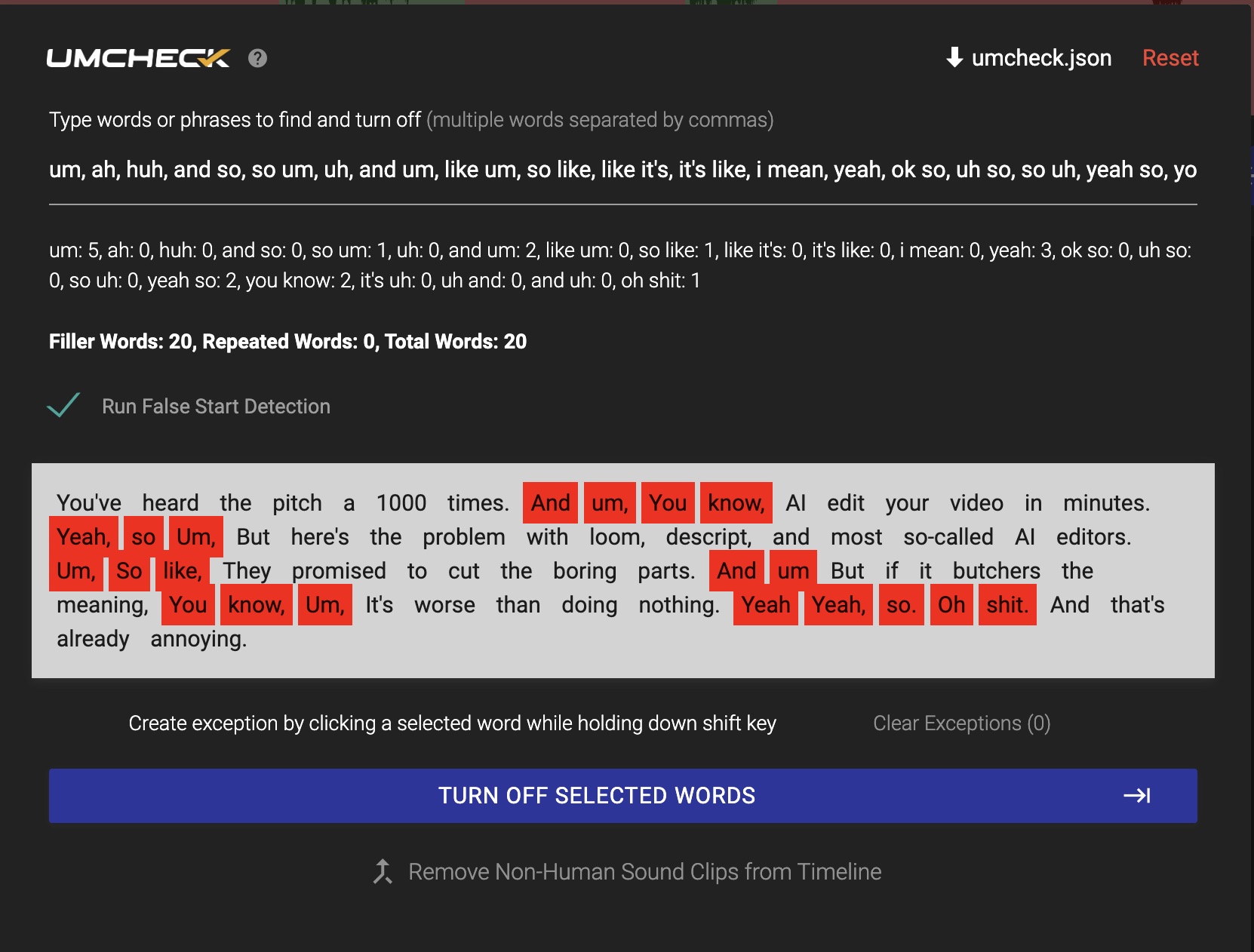

Baseline: Raw Video

- Length: 93 seconds

- Dead air (defined as pauses longer than 0.5 seconds): 75.6 seconds

- Filler words: 20

Filler words included common hesitations such as “um,” “yeah,” “so,” “you know,” as well as combinations like “and um” and “oh shit.” These counts were confirmed using TimeBolt’s Umcheck analysis tool.

The Script

“You’ve heard the pitch a thousand times.

AI edits your video in minutes.

But here’s the problem with Loom and most so-called AI editors.

They promise to cut the boring parts.

But if it butchers the meaning, it’s worse than doing nothing.

And that’s already annoying.”

TimeBolt Results

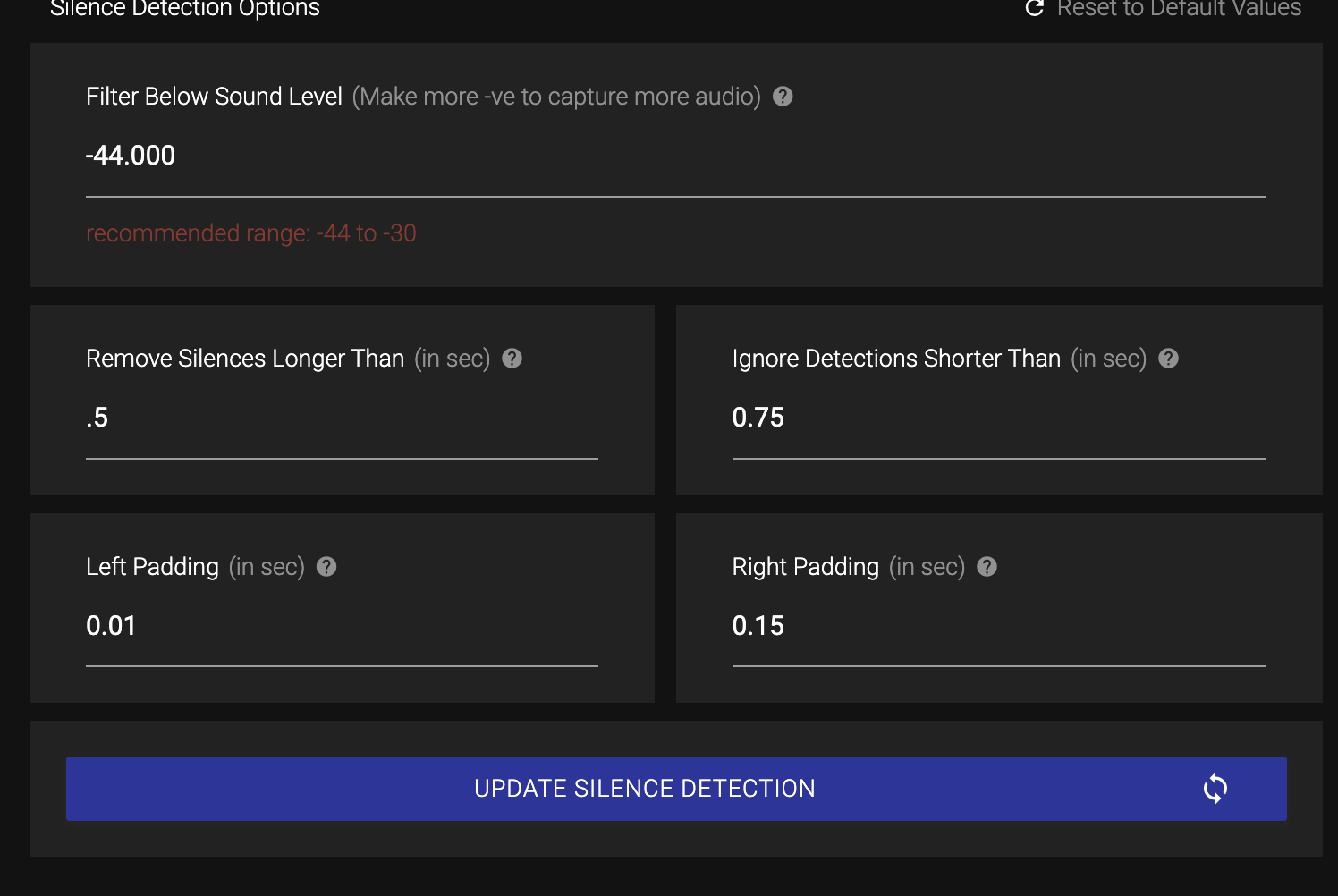

- Settings: remove silences ≥0.5 seconds, ignore <0.75 seconds, 0.01-second left padding, 0.15-second right padding

- Final video length: approximately 14.9 seconds

- Dead air missed: 0.7 seconds

- Filler words missed: 0

TimeBolt removed all identified filler words and nearly all silences, producing an output that closely matched the intended edit. In other words, you can send a banger in less time than it takes to record it live.
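For readers who want to reason about what those four settings do, here is a rough sketch of one plausible reading of them. It is an illustration only, not TimeBolt’s algorithm: the function name `plan_keeps`, the input format (detected sound bursts as start/end pairs), and the interpretation of “ignore” as dropping sound detections shorter than 0.75 seconds are all assumptions.

```python
def plan_keeps(bursts, duration,
               min_silence=0.5, ignore_shorter=0.75,
               left_pad=0.01, right_pad=0.15):
    """Turn detected sound bursts into padded keep-segments.

    bursts   -- (start, end) pairs in seconds for detected sound (hypothetical input)
    duration -- total length of the recording in seconds
    Assumed semantics: bursts shorter than ignore_shorter are discarded,
    gaps shorter than min_silence are not cut, and padding widens each keep.
    """
    merged = []
    for start, end in sorted(bursts):
        if end - start < ignore_shorter:
            continue  # treat very short detections as noise (assumption)
        if merged and start - merged[-1][1] < min_silence:
            merged[-1][1] = max(merged[-1][1], end)  # gap too short to cut
        else:
            merged.append([start, end])
    # Pad each kept segment and clamp to the recording bounds.
    return [(max(0.0, s - left_pad), min(duration, e + right_pad))
            for s, e in merged]


# Toy bursts, not taken from the test recording.
keeps = plan_keeps([(1.0, 2.0), (2.2, 3.5), (5.0, 5.2), (8.0, 9.0)], duration=10.0)
for start, end in keeps:
    print(f"keep {start:.2f}s to {end:.2f}s")
```

Under these assumptions the asymmetric padding leaves a short tail after each kept segment (0.15 s) while trimming tightly before it (0.01 s).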

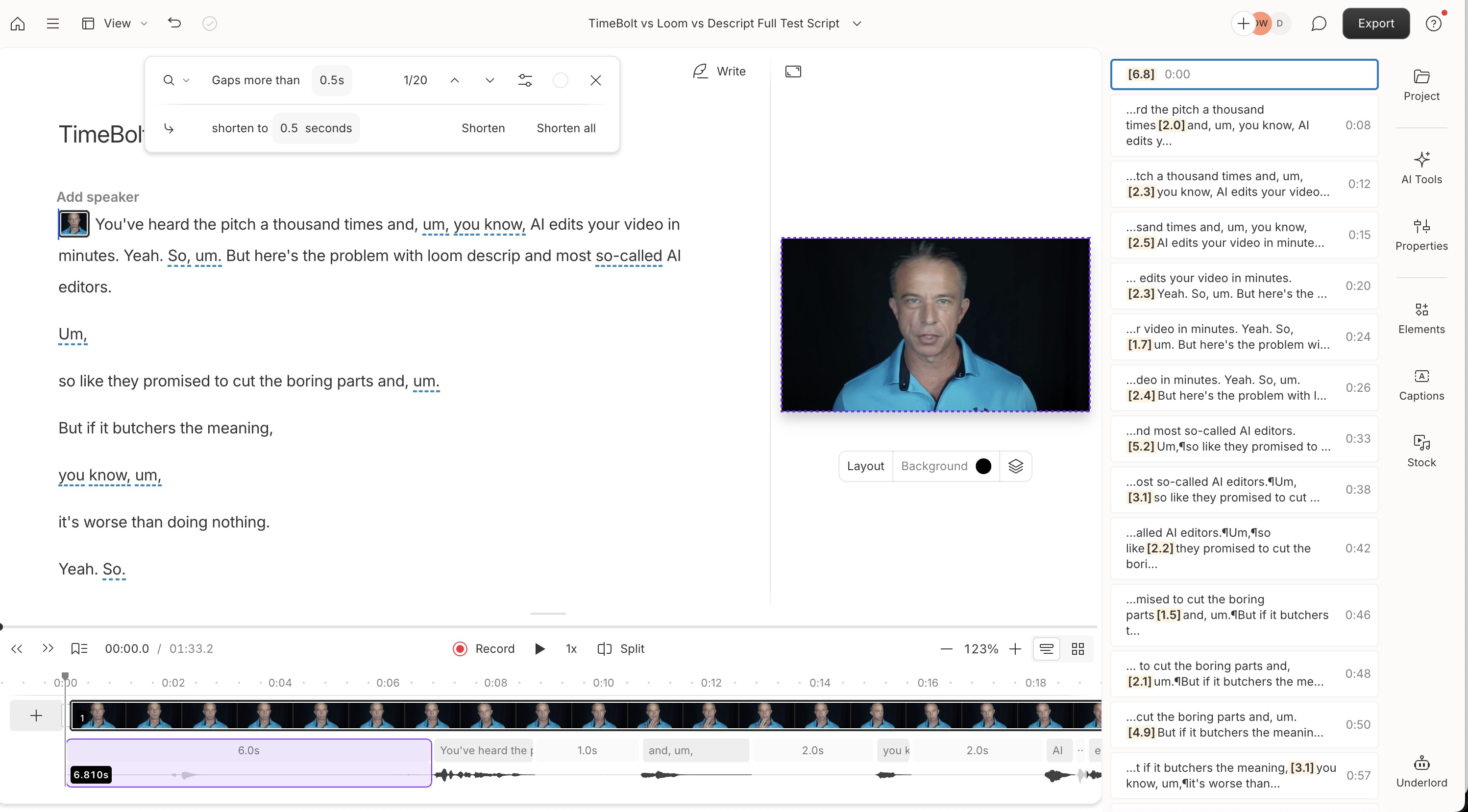

Descript Results

- Settings: Remove all filler words (with 'Avoid Harsh Cuts' turned off), and remove gaps greater than 0.5 seconds, shortening them to 0.5 seconds.

- Final video length: Approximately 23.2 seconds.

- Dead air missed: Around 3.8 seconds of explicit gaps, with an effective total of approximately 6.9 seconds when including the durations of retained fillers. This is lower than our reported 9 seconds, suggesting our study included internal pauses or used a slightly different threshold.

- Filler words missed: 12 (e.g., "and," "uh," "Yeah," "So," "like," "oh shit"). This is higher than our reported 10, showing incomplete removal despite the aggressive settings.

Descript handles silences reasonably well but retains more fillers than expected, possibly because of limits in its audio detection when no manual tweaks are applied. Its extra length compared to TimeBolt comes from these retained fillers and short unremoved pauses.

Loom Results

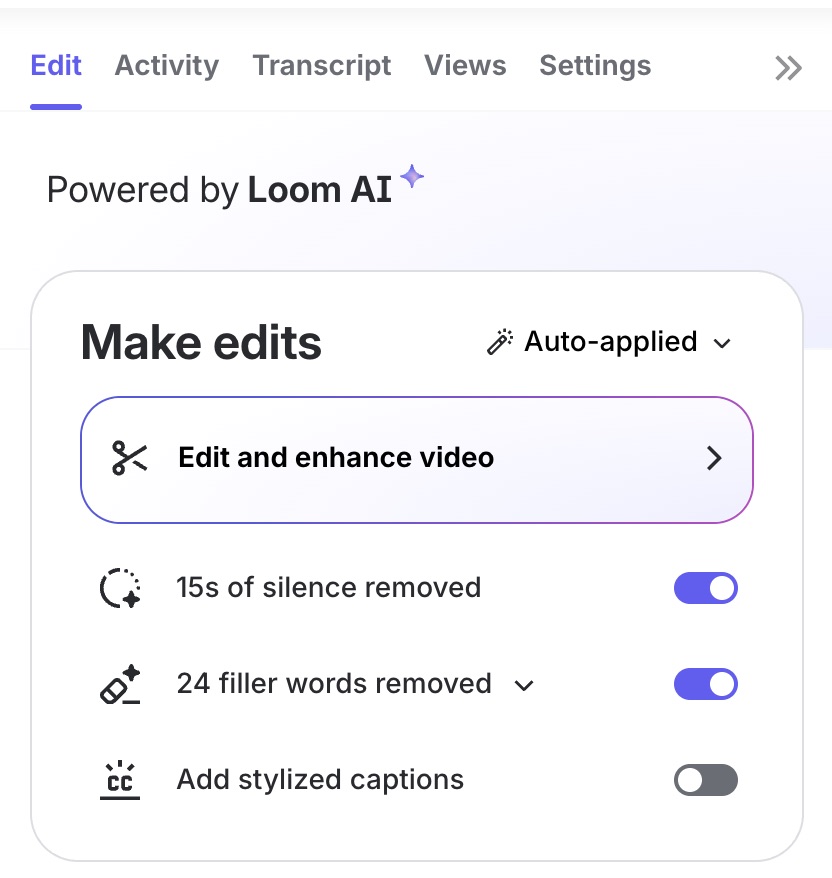

- Settings: Loom offers no fine-tuning options, so we used its defaults: Remove silences (reported 15 seconds removed) and remove fillers (reported 24 removed).

- Final duration: 65 seconds

- Dead air missed: 35 seconds, about half the video

- Filler words missed: 6 (e.g., "And," "So," "Yeah," "Oh shit"). Loom reported removing 24, which exceeds the 20 fillers in the raw recording, so it likely flagged non-fillers such as "and" as repeats.

Loom’s counters seem off: over-removing fillers with false positives while missing significant silence. The retention of "Oh shit" (listed as a filler) suggests Loom’s detection skips custom phrases, focusing only on basics like "um" or "ah."

Gling (New Test)

- Output: 21 seconds from the 93-second original

- Missed filler: 13 words

- Missed silence: 16 seconds

- Total waste: ~24 seconds

- Estimated cleanup time: ~10.7 minutes

- Settings: Gling offers no fine-tuning options, so we used its defaults: remove silences and remove fillers.

Result: Slightly better filler detection than Descript, but Gling still left over a dozen verbal tics and multiple uncut pauses. Reaching the TimeBolt baseline requires a full re-review and manual timeline repair.

Efficiency Impact

When comparing short-form edits, percentages matter more than raw seconds. With TimeBolt’s 14.9-second output as the baseline, extrapolating proportionally to a 1-hour raw video (~3,600 s, typical for unscripted commentary) or a 2-hour one shows how this scales into a real time problem.

Assumptions: Tools maintain similar removal ratios (real results vary by content, but this illustrates the filler burden).
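The percentage framing can be made concrete with the short-form output lengths reported in the per-tool sections above (14.9 s, 23.2 s, 21 s, and 65 s). The sketch below only computes how much longer each output runs than the TimeBolt baseline; the function name is illustrative, and it does not attempt to reproduce the long-form figures in the table.

```python
BASELINE_S = 14.9  # TimeBolt short-form output, from the results section

# Short-form output lengths as reported in the per-tool sections.
outputs_s = {"Descript": 23.2, "Gling": 21.0, "Loom": 65.0}


def pct_over_baseline(output_s, baseline_s=BASELINE_S):
    """Percentage by which an output runs longer than the baseline output."""
    return (output_s - baseline_s) / baseline_s * 100


for tool, seconds in outputs_s.items():
    print(f"{tool}: {pct_over_baseline(seconds):.1f}% longer than baseline")
# Descript: 55.7% longer than baseline
# Gling: 40.9% longer than baseline
# Loom: 336.2% longer than baseline
```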

| Tool | Output Length | Filler Missed | Silence Missed | Cleanup Time | Efficiency Impact |
|---|---|---|---|---|---|
| TimeBolt | 42:55 | 0 | 0 s | 0 min | Perfect one-pass edit; no rework needed. |
| Descript | 47:54 | 448 words | 171 s | ≈52 min | Adds ~1 hour per project fixing missed filler and pauses. |
| Gling | 46:18 | 607 words | 70 s | ≈174 min | Over 3 hours of cleanup time from inaccurate cuts. |
| CapCut | 46:43 | 721 words | 80 s | ≈206 min | ~3.5 hours of manual timeline correction per hour of footage. |
| Loom | 57:47 | 357 words | 528 s | n/a | Cannot repair; missed silence remains in final video. |

Reproducibility

To allow others to confirm or challenge these results, we are providing the materials used:

- Download Raw Test Script Video

- Raw Word-Level Data (Umcheck JSON): The JSON array provided, with start/end timestamps for each word.

- TimeBolt SRT Output: the JSON array from our processed file.

- Descript SRT Output / Filler Only SRT

- Loom SRT Output / Filler Only SRT

- Gling SRT Output / Filler Only SRT

- TimeBolt Silence Detection Settings: Remove silence longer than 0.5 sec, Ignore Detections Shorter than 0.75 sec, Left Padding 0.01 sec, Right Padding 0.15 sec

- Descript Detection Settings: Remove All Filler Words selected (Avoid Harsh Cuts turned off), Remove Gaps greater than 0.5 sec, Shorten to 0.5 sec

- Loom Detection Settings: Unable to fine-tune settings. Remove Silences on (reported 15 sec removed); Remove Filler on (reported 24 filler words removed)

- Gling Detection Settings: Unable to fine-tune

Filler Words Measured:

um, ah, huh, and so, so um, uh, and um, like um, so like, like it's, it's like, i mean, yeah, ok so, uh so, so uh, yeah so, you know, it's uh, uh and, and uh, oh shit
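If you want to count these phrases in your own word-level transcript, a minimal sketch follows. It is not the Umcheck implementation: the `find_fillers` function, the greedy longest-match strategy, and the punctuation stripping are assumptions chosen for illustration, and a plain phrase match will also flag legitimate uses of phrases such as “it’s like.”

```python
import re

# Phrase list copied from the "Filler Words Measured" list above.
FILLERS = [
    "um", "ah", "huh", "and so", "so um", "uh", "and um", "like um",
    "so like", "like it's", "it's like", "i mean", "yeah", "ok so",
    "uh so", "so uh", "yeah so", "you know", "it's uh", "uh and",
    "and uh", "oh shit",
]
# Try longer phrases first so "and um" wins over a bare "um".
PHRASES = sorted((tuple(p.split()) for p in FILLERS), key=len, reverse=True)


def normalize(token):
    """Lowercase a token and strip punctuation, keeping apostrophes."""
    return re.sub(r"[^a-z']", "", token.lower().replace("\u2019", "'"))


def find_fillers(tokens):
    """Return (index, phrase) pairs for each filler found, scanning left to right."""
    words = [normalize(t) for t in tokens]
    hits, i = [], 0
    while i < len(words):
        for phrase in PHRASES:
            if tuple(words[i:i + len(phrase)]) == phrase:
                hits.append((i, " ".join(phrase)))
                i += len(phrase)
                break
        else:
            i += 1  # no filler starts at this word
    return hits


# Toy sentence, not from the test recording.
sample = "And um, you know, it's like a test. Yeah so we cut it".split()
print(find_fillers(sample))
```

Run against word tokens with timestamps, the returned indices can be mapped back to start and end times to see which fillers fall inside an editor’s kept segments.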

Anyone can repeat the test with these files. If your results differ, we encourage you to share them so the comparison can be refined further.

Conclusion

The independent review confirms that TimeBolt offers the most accurate automated cleanup among the tools tested. Descript provides a strong alternative, especially for users who prefer to fine-tune their edits manually. Loom remains a convenient option for simple screen recordings but does not provide the same level of precision.

This verification strengthens our original conclusion: for creators seeking reliable automated editing, TimeBolt delivers the most consistent results.

Disclaimer: The results of this study are based on tests conducted and verified as of September 14, 2025. Technology and software, including TimeBolt, Descript, and Loom, may change over time, potentially affecting performance. We encourage users to test these tools with the latest versions and share their findings for ongoing accuracy.

Update: October 2025

Gling’s short-form results were re-tested with Bad Takes turned OFF and verified using JSON data parsed through TimeBolt’s reverse-timeline analysis. This update confirms that Gling’s 22-second output contained approximately 7 seconds of uncut silence across ~55 missed regions. The JSON verification aligns Gling’s evaluation with the same standard used for Descript and Loom, ensuring all tools were tested on identical inputs and measured through TimeBolt’s waveform-based accuracy model.