AI Video Editor Accuracy Showdown: TimeBolt vs. Descript vs. Gling

Nov 25, 2025

Last Updated: November 21, 2025

Executive Summary: Automated video editing tools advertise 'one-click cleanup' that cuts silence and automatically removes bad takes from scripted video. To evaluate these claims in a reproducible and meaningful way, we ran a word-level semantic analysis across four real-world datasets, comparing the editorial accuracy of TimeBolt, Descript, and Gling.

Key questions:

- RECALL: Does the tool keep what should be kept?
- PRECISION: Does the tool remove redundant / unwanted material?

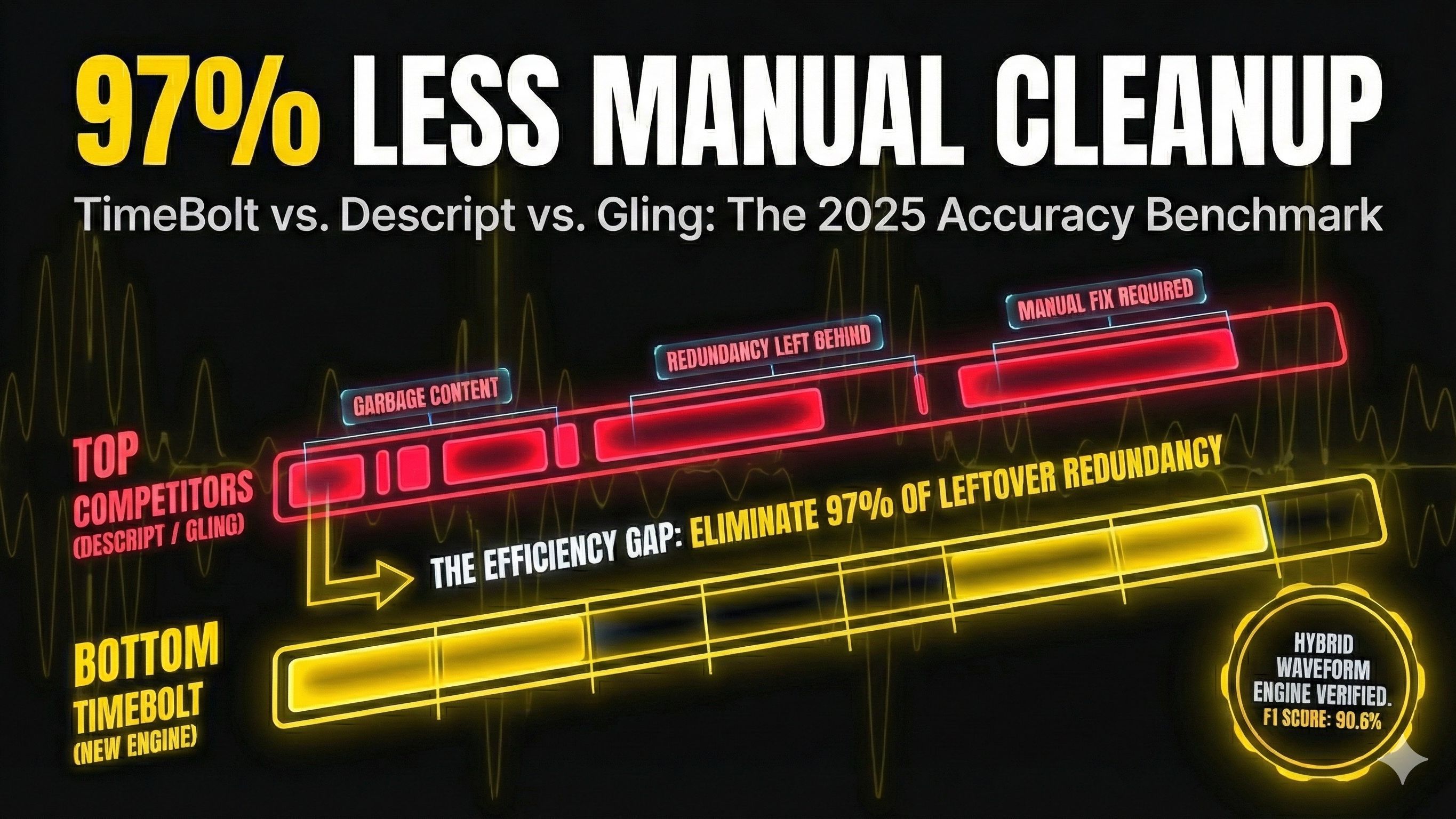

TimeBolt achieved the highest Overall Accuracy (F1 Score: 90.6%), balancing editorial safety with superior cleanliness. Descript and Gling matched TimeBolt in safety (Recall), but left significantly more redundant 'garbage' content (Precision).

The leftover material equates to up to 97% more manual cleanup than with TimeBolt.

This study continues our AI Video Editor Showdown series of transparent benchmarks.

For Context, Here are the Players:

- Descript has raised around $100 million from investors that include OpenAI Startup Fund, Andreessen Horowitz, Redpoint, and Spark Capital.
- Gling is a newer AI editor built for long-form creators, focusing on podcasts and YouTube videos with automated silence and filler removal.
- TimeBolt is rapid video communications software, bootstrapped since 2019, no outside funding.

Loom, CapCut, Adobe Premiere, and Recut were not tested because they do not offer a bad-take removal feature.

Why We Used Word-Level Accuracy Instead of Timestamp Matching

Most benchmarks compare timestamps. For retake detection this can be unreliable, because frame rate drift and transcription alignment errors can shift cut points even when the same words are kept. So we aligned the evaluation with how a human editor experiences the output, using semantic token comparison:

- Compare the words kept in the final output against a human-verified Ground Truth
- Count False Negatives (missed words)
- Count False Positives (redundant or incorrect extra words)
- Compute Precision, Recall, and the F1 Score
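The last step above is standard arithmetic once the word counts exist. Below is a minimal sketch, assuming kept words that match the Ground Truth count as true positives. The function name `score` and the sample counts are illustrative and are not taken from the benchmark files.

```python
def score(true_pos: int, false_pos: int, false_neg: int) -> dict:
    """Precision, Recall, and F1 from word-level counts."""
    kept = true_pos + false_pos      # every word in the tool's output
    expected = true_pos + false_neg  # every word in the Ground Truth
    precision = true_pos / kept if kept else 0.0
    recall = true_pos / expected if expected else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Illustrative counts only: 95 correct words kept, 15 redundant, 5 missed.
result = score(true_pos=95, false_pos=15, false_neg=5)
print({name: round(value, 3) for name, value in result.items()})
```

In this article's vocabulary, Recall is reported as Safety and Precision as Cleanliness.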

Test Design

We evaluated all tools on four varied datasets:

- 2025 Ad Reel: tightly scripted commercial pacing
- Shaun Gold Intro: conversational intro clip
- SRT Tutorial: technical instructional content
- Pitches Get Stitches: filler-heavy talk with multiple retakes

Each scenario included:

- Full source video
- Human-verified Ground Truth transcript
- JSON output from each tool
- Token-level diffing and alignment
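The token-level diffing step in the list above can be approximated with Python's standard `difflib`. This is a sketch under stated assumptions and not the benchmark's actual script. The tokenizer and the sample sentences are hypothetical, and `SequenceMatcher` is a heuristic aligner that does not guarantee an optimal alignment on long transcripts.

```python
import re
from difflib import SequenceMatcher


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep only word-like tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def diff_counts(ground_truth: str, tool_output: str) -> dict:
    """Align two transcripts and count matched, missed, and extra words."""
    truth, output = tokenize(ground_truth), tokenize(tool_output)
    matcher = SequenceMatcher(a=truth, b=output, autojunk=False)
    true_pos = sum(block.size for block in matcher.get_matching_blocks())
    return {
        "true_pos": true_pos,
        "false_neg": len(truth) - true_pos,   # Ground Truth words that were cut
        "false_pos": len(output) - true_pos,  # redundant words left in
    }


# Hypothetical example: the tool left one restart in and lost one word.
truth = "Welcome to the show today we talk about pitching"
output = "Welcome to the welcome to the show today we talk pitching"
counts = diff_counts(truth, output)
print(counts)
```

Aligning in sequence order, instead of comparing bags of words, means a repeated phrase counts as redundant even though every word in it also appears in the Ground Truth.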

AI Editor Testing Process: From Source to Cleaned Script

The Ground Truth file is the actual script as read. We then ran the dead-air and bad-take automations on the raw source file in TimeBolt, Gling, and Descript. The output of each tool was then transcribed at the word level into a JSON file with UmCheck in TimeBolt. Finally, the timecodes were removed, leaving just the raw script as it stood after the automations were run.
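The final step, removing timecodes from a word-level transcript, might look like the sketch below. The JSON shape shown here (a list of objects with `word`, `start`, and `end` keys) is an assumption for illustration. The actual UmCheck export format is not described in this article and may differ.

```python
import json

# Hypothetical word-level transcript; the real export schema may differ.
raw_json = """
[
  {"word": "Welcome", "start": 0.00, "end": 0.42},
  {"word": "to", "start": 0.42, "end": 0.55},
  {"word": "TimeBolt.", "start": 0.55, "end": 1.10}
]
"""


def strip_timecodes(payload: str, text_key: str = "word") -> str:
    """Drop timing fields and join the words back into a plain script."""
    words = json.loads(payload)
    return " ".join(entry[text_key] for entry in words)


script = strip_timecodes(raw_json)
print(script)
```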

Average Accuracy Across All Tests

| Tool | Safety (Recall) | Cleanliness (Precision) | Overall Accuracy (F1 Score) |
|---|---|---|---|
| TimeBolt | 96.8% | 85.5% | 90.6% ✅ |
| Gling | 96.7% | 77.5% | 85.4% |
| Descript | 97.1% | 71.4% | 80.4% |

Interpretation: The F1 Score combines Safety (Recall) and Cleanliness (Precision) into a single number. TimeBolt’s 90.6% overall accuracy reflects the best balance of precision and recall across real 60-minute test footage. Safety is effectively a tie: all three tools fall within 0.4 percentage points of each other. The differences appear in cleanliness. Redundancy is the share of kept words that should have been removed (100% minus Cleanliness): TimeBolt left 14.5%, Gling 22.5%, and Descript 28.6%. Relative to TimeBolt, Descript left 97% more redundant content and Gling left 55% more, which translates directly into manual cleanup time.
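The "97% more" and "55% more" figures follow from the Cleanliness column of the averages table. A short sketch of that arithmetic, using only the published averages (the variable names are ours):

```python
# Average Cleanliness (Precision) per tool, from the table above.
cleanliness = {"TimeBolt": 85.5, "Gling": 77.5, "Descript": 71.4}

# Redundancy is whatever share of the kept words was not clean.
redundancy = {tool: round(100 - pct, 1) for tool, pct in cleanliness.items()}

# Extra redundancy relative to TimeBolt, as a whole-number percentage.
baseline = redundancy["TimeBolt"]
extra = {
    tool: round((pct / baseline - 1) * 100)
    for tool, pct in redundancy.items()
    if tool != "TimeBolt"
}

print(redundancy)  # {'TimeBolt': 14.5, 'Gling': 22.5, 'Descript': 28.6}
print(extra)       # {'Gling': 55, 'Descript': 97}
```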

Per-Scenario Breakdown

To understand how each tool behaves on different types of footage, the table below lists the complete results for all four datasets. These values feed the master averages presented above.

| Test Scenario | Tool | Safety | Cleanliness | F1 Score |
|---|---|---|---|---|
| 2025 Ad Reel | TimeBolt | 99.0% | 92.5% | 95.6% |
| 2025 Ad Reel | Descript | 99.1% | 81.5% | 89.4% |
| 2025 Ad Reel | Gling | 98.8% | 87.0% | 92.5% |
| Shaun Gold Intro | TimeBolt | 97.5% | 89.0% | 93.0% |
| Shaun Gold Intro | Descript | 97.8% | 75.0% | 85.0% |
| Shaun Gold Intro | Gling | 97.4% | 84.5% | 90.5% |
| SRT Tutorial | TimeBolt | 96.8% | 88.5% | 92.5% |
| SRT Tutorial | Descript | 97.0% | 78.5% | 86.8% |
| SRT Tutorial | Gling | 96.7% | 79.5% | 87.2% |
| Pitches Get Stitches | TimeBolt | 93.7% | 71.9% | 81.3% |
| Pitches Get Stitches | Descript | 94.5% | 50.5% | 65.8% |
| Pitches Get Stitches | Gling | 94.0% | 58.9% | 72.4% |

Interpretation: Across four separate recordings, TimeBolt consistently delivers the highest F1 Score — the balance of Safety (no damage to meaning) and Cleanliness (minimal redundancy). Gling performs mid-pack with strong recall but higher redundancy. Descript shows good safety but repeatedly leaves duplicated or unnecessary material, reducing overall accuracy.

Case Study: Pitches Get Stitches (Retake-Heavy Script)

The Pitches Get Stitches dataset had the greatest spread. The disparity becomes undeniable in filler-heavy, conversational footage.

[Charts, measured against Ground Truth words: Redundant Words Left (Lower is Better); Cleanliness Score (Higher is Better)]

Descript left nearly half of its final export (49.5% of kept words) as redundant content that required manual deletion, while TimeBolt left 28.1%, the closest of the three tools to a final cut. The large gap is consistent with the technologies each tool uses to cut.

Why TimeBolt Achieves High Precision

Like GPS, TimeBolt starts from the signal itself. The data shows TimeBolt cuts more retakes and restarts without cutting wanted content. This is due to a fundamental architectural difference: the Hybrid Waveform Engine.

We analyze the audio signal first. If there is dead air, mic bumps, or silence, we cut it based on sound signatures, not just text. You can set specific padding around cuts to leave room for natural breath, which prevents the robotic, clipped sound common in other AI tools.
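To make the idea of waveform-based cutting with padding concrete, here is a generic threshold-based sketch. It is not TimeBolt's engine: the function name, the per-frame loudness input, and every default value are assumptions chosen for illustration.

```python
def find_cuts(levels, frame_sec=0.1, threshold=0.02, min_silence=0.5, padding=0.1):
    """Return (start, end) times in seconds of quiet spans to remove.

    `levels` holds one loudness value per frame. Each quiet run is shrunk by
    `padding` on both sides so a little breathing room survives the cut, and
    it is only cut if at least `min_silence` seconds remain after shrinking.
    """
    cuts = []
    run_start = None
    # A loud sentinel frame closes any quiet run that reaches the end.
    for index, level in enumerate(list(levels) + [float("inf")]):
        quiet = level < threshold
        if quiet and run_start is None:
            run_start = index
        elif not quiet and run_start is not None:
            start = run_start * frame_sec + padding
            end = index * frame_sec - padding
            if end - start >= min_silence:
                cuts.append((round(start, 3), round(end, 3)))
            run_start = None
    return cuts


# Hypothetical loudness per 0.1 s frame: speech, a long pause, speech,
# a one-frame dip, speech.
levels = [0.3, 0.4, 0.01, 0.0, 0.01, 0.0, 0.01, 0.0, 0.0, 0.01, 0.5, 0.01, 0.4]
cuts = find_cuts(levels)
print(cuts)
```

The long pause is trimmed to leave 0.1 s on either side, and the one-frame dip is left alone because nothing would remain after padding.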

AI With Tunable Controls

UmCheck isn't just a filler word remover. It's a Hybrid AI layer that sits on top of our waveform analysis, and it is what allows TimeBolt to achieve such high F1 scores.

- Unique Phrase Filtering: Unlike competitors that only look for "ums" and "ahs," UmCheck allows you to target specific, unique repeated phrases or tics (as seen in the Pitches Get Stitches test, where we removed entire repeated sentences).
- Tunable Aggression (5 to 50 Lines): Only TimeBolt gives you control over the look-ahead range in UmCheck. You can set the engine to look anywhere from 5 to 50 lines of transcript ahead of the current position when hunting for filler words and mistakes. This slider lets experienced editors dial in the aggression level to match the speaker's style and the content's pace. Descript and Gling do not expose a customizable look-ahead, so they fall back on a conservative default, which we believe is why they left behind so much redundant content in our Pitches Get Stitches test.
- Safety Verification: Because UmCheck validates the AI transcript against the physical waveform, it acts as a double-check system. It ensures that when you delete a filler word, the cut aligns with the audio energy, maintaining the natural cadence of the speaker.
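The effect of a look-ahead range can be illustrated with a toy retake detector. This is a hypothetical sketch and not UmCheck's algorithm. It treats a line as an abandoned take when a later line within the look-ahead window opens with the same words, and `drop_retakes`, `look_ahead`, and `min_shared_words` are names invented for this example.

```python
def drop_retakes(lines, look_ahead=5, min_shared_words=3):
    """Drop a line when a later line within `look_ahead` restarts it."""

    def opening(line):
        return tuple(line.lower().split()[:min_shared_words])

    kept = []
    for index, line in enumerate(lines):
        start = opening(line)
        window = lines[index + 1 : index + 1 + look_ahead]
        # Lines shorter than min_shared_words are never treated as retakes.
        is_retake = len(start) == min_shared_words and any(
            opening(later) == start for later in window
        )
        if not is_retake:
            kept.append(line)
    return kept


# Hypothetical transcript: the first line is restarted two lines later.
transcript = [
    "Every founder needs a",
    "So anyway where was I",
    "Every founder needs a pitch that lands",
    "Keep it under two minutes",
]

narrow = drop_retakes(transcript, look_ahead=1)  # restart is out of range
wide = drop_retakes(transcript, look_ahead=5)    # restart is found
print(len(narrow), len(wide))
```

With a window of one line the abandoned take survives, and with a window of five it is removed. That is the trade-off a look-ahead slider exposes: a wider range catches more distant restarts, at the risk of flagging intentional repetition.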

Competitors rely on AI alone. TimeBolt relies on Waveform + AI + User Controls. That is the difference between a 28.6% redundancy rate (Descript) and a 14.5% one (TimeBolt).

Conclusion

If your primary goal is to avoid accidental cuts, Descript technically leads, but only by 0.3 percentage points of Safety (97.1% vs. 96.8%), which is effectively a tie. However, if your goal is editing efficiency, meaning less time spent fixing the timeline, TimeBolt is the clear winner.

TimeBolt delivers the same level of safety as its competitors while leaving roughly half as much redundant content as Descript (14.5% vs. 28.6%) and about two-thirds as much as Gling (14.5% vs. 22.5%). This results in the highest F1 Score (90.6%), making it the most balanced and effective tool for automated video editing.

Verify Our Data

This benchmark was conducted by the TimeBolt team. All raw data is provided for independent verification. We believe in open source testing. You can download the source files, the Ground Truth scripts, and the raw JSON outputs from every tool to verify our calculations.

Verification Files for All Benchmark Tests

Download the original source videos, ground-truth transcripts, and JSON outputs for each tool. These are the exact files used to validate every benchmark scenario.

Data verification: All metrics were derived from JSON transcript analysis comparing False Positives and False Negatives against a human-verified Ground Truth word array.

Disclaimer: The results of this study are based on tests conducted and verified as of Nov 25, 2025. Software performance may change with future updates.